The second and previous blog in this six part series (@ http://www.vamsitalkstech.com/?p=4670) discussed technical challenges with running large scale Digital Applications on traditional datacenter architectures. In this third blog, we will deep dive into another important ecosystem platform – Apache Mesos, a project that aims to abstract away various system resources – CPU, memory, network and disk resources to provide consuming digital applications with a giant cluster from which they can utilize capacity – a key requirement of the Software Defined Datacenter (SDDC). The next blogpost will deep dive into Linux Containers & Docker.

Introduction and the need for Apache Mesos..

This blog has from time to time discussed how Digital applications are a diverse blend of several different and broad technology paradigms – Big Data, Intelligent Middleware, Messaging, Business Process Management, Data Science et al.

To that end almost every Enterprise Datacenter supporting Digital workloads typically has clusters of multi-varied applications installed. Most traditional datacenters have used either physical or virtual machines (VMs) as the primary runtime unit to run such applications. These VMs are typically provisioned based on application asks and have applications deployed onto them. These VMs then are formed into logical clusters which are essentially a series of machines serving a given business application in an n-tier architecture.

As load increases on these servers, more VMs are provisioned into the cluster and so on. The challenge with this traditional model is that it is fairly static in nature, in the sense that machines are preallocated to run certain kinds of workloads (databases, webservers, developer servers etc). The challenge with Digital and Cloud Native applications is that scaling needs to happen dynamically and applications think of the infrastructure as being infinite. These applications present various challenges and headaches that call for the Datacenter to be software defined, as we discussed in the last blog (linked below). We will continue our look at the SDDC by considering one of the important projects in this landscape – Apache Mesos.

Why Digital Platforms Need A Software Defined Datacenter..(2/7)

Apache Mesos is a project that was developed at the University of California at Berkeley circa 2009. While it was initially created to solve the challenge of provisioning and scaling Spark clusters, the Mesos project evolved to become a centralized cluster manager. The central idea of Mesos is to pool together all the physical resources of the cluster and make them available as a single reservoir of highly available resources for different applications (or frameworks) to consume. Over time, Mesos has begun supporting complex n-tier application platforms that leverage capabilities such as Hadoop, Middleware, Jenkins, Kafka, Spark, Machine Learning etc.

As with almost all innovative Cloud & Big Data projects, the adoption of Apache Mesos has primarily been in the web scale arena. Prominent users include highly technical engineering shops such as Twitter, Netflix, Airbnb, Uber, eBay, Yelp and Apple. However, there seems to be early adopter activity with increased acceptance in the Fortune 100. For instance, Verizon signed on in 2015 to use Mesosphere DC/OS (based on Apache Mesos) for datacenter orchestration.

The Many Definitions of Mesos..

At its simplest, Mesos is an Open Source Cluster Manager. What does that mean? Mesos can be described as a cluster manager because it ensures that datacenter hardware resources are managed and advantageously shared among multiple distributed technologies – Big Data, Message Oriented Middleware, Application Servers, Mobile apps etc. Mesos also enables applications to scale with a high degree of resiliency, without having to bother about details of the underlying infrastructure.

The model of resource allocation followed by Mesos allows a range of constituents (sys-admins, developers & DevOps teams) to request resources (CPU, RAM, Storage) from a cloud provider.

Mesos has alternatively been described as a Datacenter Kernel as it provides a single unified view of node resources to software frameworks that wish to consume them via APIs. Mesos performs the role of an Intelligent global level scheduler that can match a massive pool of hardware resources to distributed applications that want to consume these resources. Mesos aggregates all the resources into a large virtual pool using not just virtual machines and containers but primitives such as CPU, I/O and RAM. It also breaks applications into small units that can be assigned across this pool. Mesos also provides APIs in multiple languages to allow applications to be built for it. Apache Spark, the most popular data processing engine, was built originally as a Mesos framework.

It is also called a Data Center Operating System (DCOS) as it performs a similar role to the operating system. Any application that can run on Linux runs on Mesos.

To illustrate how Mesos works, consider two clusters in a datacenter – Cluster A and Cluster B. Cluster A has 8 nodes, with each node/server possessing 4 CPUs and 64 GB RAM; Cluster B has 5 nodes, with each node/server having 4 CPUs and 64 GB RAM. Mesos can essentially combine both these clusters into one virtual cluster of 52 CPUs and 832 GB RAM. The advantage of this approach is that cluster usage is greatly improved because applications share resources much more efficiently.
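The arithmetic behind that pooled figure can be checked with a few lines of Python. This is only a sketch of the bookkeeping, not of anything Mesos itself runs; the `Cluster` class and `pool` function are names made up for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cluster:
    """Hypothetical description of a homogeneous cluster (illustration only)."""
    name: str
    nodes: int
    cpus_per_node: int
    ram_gb_per_node: int

    @property
    def total_cpus(self) -> int:
        return self.nodes * self.cpus_per_node

    @property
    def total_ram_gb(self) -> int:
        return self.nodes * self.ram_gb_per_node


def pool(clusters):
    """Sum the CPU and RAM of every cluster into one virtual pool."""
    cpus = sum(c.total_cpus for c in clusters)
    ram_gb = sum(c.total_ram_gb for c in clusters)
    return cpus, ram_gb


# The two clusters from the example above.
cluster_a = Cluster("A", nodes=8, cpus_per_node=4, ram_gb_per_node=64)
cluster_b = Cluster("B", nodes=5, cpus_per_node=4, ram_gb_per_node=64)

pooled_cpus, pooled_ram_gb = pool([cluster_a, cluster_b])
print(f"{pooled_cpus} CPUs, {pooled_ram_gb} GB RAM")
```

Cluster A contributes 32 CPUs and 512 GB, Cluster B contributes 20 CPUs and 320 GB, which adds up to the 52 CPUs and 832 GB quoted above.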

Mesos and Cloud Native Applications..

We discussed the differences between Cloud Native and legacy applications in the previous post @ http://www.vamsitalkstech.com/?p=4670 . Mesos has been impactful when running stateless Cloud Native applications as opposed to running traditional applications which are built on a stateful/vertical scaling paradigm. While the defining features of Cloud Native applications are worthy of a dedicated blogpost, these applications can scale to handle massive & increasing amounts of load while tolerating any failure without impacting service. These applications are also intrinsically distributed in nature and are typically composed of loosely coupled microservices. Examples include stateless web applications running on a Platform as a Service (PaaS), CI/CD applications working on Jenkins, and NoSQL databases like HBase, Cassandra, Couchbase and MongoDB. Stateful applications that persist data to disk using an RDBMS aren’t good workloads for Mesos as yet.

When Cloud Native Digital applications are run on Mesos, several of the headaches encountered in running these on legacy datacenters are ameliorated, namely –

- Clusters can be dynamically provisioned by Mesos based on demand spikes

- Location independence for microservices

- Fault tolerance

As it matures, Mesos has also begun supporting multi datacenter deployments, with web scale shops like Uber running Cassandra as a framework across datacenters at scale. In the case of Uber, each datacenter has its own Mesos cluster with independent frameworks that exchange information periodically. The Cassandra database includes a seed node that bootstraps the gossip process for new nodes joining the cluster. A custom seed provider was created to launch Cassandra nodes, which allows new nodes to be rolled out automatically into the Mesos cluster in each datacenter. (Credit – Abhishek Verma – Uber)

Mesos Architecture..

There are three main architectural primitives in Mesos – Master, Slave, Frameworks. The central orchestrator in the Mesos system is called a Master and the worker processes are called Slaves.

As depicted below, the Master process manages the overall cluster and delegates tasks to the slaves based on the resources requested by Frameworks.

The core Mesos process is installed on all nodes and each node’s role (Master or Slave) is assigned at runtime. The Slaves run application workloads that are requested by appropriate frameworks. This overall setup of Master and Slave daemons makes up a Mesos cluster.

Frameworks, which are commonly called Mesos applications, are composed of two main components. First off, they have a scheduler which registers with the Master to receive resource offers, and then executors which launch workloads or tasks on the slaves. The Resource offers are a simple list of a slave’s available capacity – CPU and Memory. The Master receives this available capacity from the slaves and then offers it to the frameworks. A task can be anything really – a simple script or a command, a MapReduce job, or the initialization of a Jetty/Tomcat/JBoss AS instance etc.

The Mesos executor is a process (a program or command) on the Slave that runs tasks. No matter which isolation module is used, the executor packages all resources and runs the task on the slave node. When the task is complete, the containers are destroyed and the Slave’s resources are released back to the Master.

For Master HA, you can run multiple masters with only one Active at a given point communicating with the slave nodes. If the active Master fails, Apache Zookeeper is used to manage leader election to a standby Master as depicted. An HA Master ensemble needs a minimum of 3 nodes but most production deployments are recommended to have 5 Master nodes. Once a new Master is elected, all of the cluster/slave and framework information is submitted to the new Master by the frameworks so that state before failure can be reconstructed. Mesos has elaborate recovery processes for the frameworks, the schedulers and the Slave nodes.
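The reasoning behind the 3 and 5 Master recommendations is easiest to see with the usual majority rule for leader election. The sketch below assumes a simple majority quorum; the function names are made up for this illustration and are not part of any Mesos or Zookeeper API.

```python
def quorum_size(masters: int) -> int:
    """Smallest number of masters that forms a strict majority (assumed rule)."""
    if masters < 1:
        raise ValueError("need at least one master")
    return masters // 2 + 1


def tolerated_failures(masters: int) -> int:
    """How many masters can fail while a majority is still reachable."""
    return masters - quorum_size(masters)


for n in (1, 2, 3, 4, 5):
    print(f"{n} masters: quorum {quorum_size(n)}, "
          f"tolerates {tolerated_failures(n)} failure(s)")
```

Under this rule, 3 Masters is the smallest ensemble that survives the loss of one node, 5 Masters survives the loss of two, and an even count (4) tolerates no more failures than the odd count below it (3). That is one way to read the advice to run an odd number of Masters.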

By some measures, Mesos is a very straightforward concept. Frameworks need to run tasks, and these are traffic managed by Masters which coordinate tasks on worker machines called Slaves.

From a production deployment standpoint, the following components are required – an odd number of Mesos Masters, many Slave machines to run applications, a Zookeeper ensemble for HA configurations and an optional Docker engine running on each slave.

The Mesos Resource Allocation Process..

Mesos follows a default resource scheduling model known as two-tier scheduling. This model may seem a little convoluted but it is important to keep in mind that it was designed to satisfy the requirements & constraints of many different frameworks without having to know details of each.

The Master’s allocation module receives reports of available resources from slaves and forwards them on to the framework schedulers as resource offers. These offers describe not just which resources are on offer but also how much of each. The framework schedulers can accept or reject the Master’s offers based on their current capacity requirements. The Master’s allocation module is customizable based on specific requirements that implementing enterprises may have. The default allocation algorithm is known as Dominant Resource Fairness (DRF) and is based on fair sharing of cluster resources among requesting applications. For instance, DRF equalizes each application’s share of its dominant resource, i.e. a CPU hungry application is provided a given fraction of the cluster’s CPU & a Memory intensive application is provided the same fractional amount of RAM.
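To make the DRF idea concrete, here is a simplified sketch in Python. It is not the Mesos allocator code: it just repeatedly hands one more task to whichever framework currently has the lowest dominant share, until nothing more fits. The cluster size, framework names and per-task demands are made-up numbers for illustration.

```python
from fractions import Fraction


def dominant_share(allocated, capacity):
    """Largest fraction of any single resource that a framework holds."""
    return max(Fraction(allocated[r], capacity[r]) for r in capacity)


def drf_allocate(capacity, demands):
    """Grant one task at a time to the framework with the lowest dominant share."""
    used = {r: 0 for r in capacity}
    allocated = {f: {r: 0 for r in capacity} for f in demands}
    tasks = {f: 0 for f in demands}
    active = sorted(demands)  # frameworks that may still fit another task
    while active:
        framework = min(
            active, key=lambda f: dominant_share(allocated[f], capacity)
        )
        need = demands[framework]
        if all(used[r] + need[r] <= capacity[r] for r in capacity):
            for r in capacity:
                used[r] += need[r]
                allocated[framework][r] += need[r]
            tasks[framework] += 1
        else:
            # This framework's next task no longer fits; stop considering it.
            active.remove(framework)
    return tasks, allocated


# Hypothetical cluster and per-task demands.
capacity = {"cpus": 9, "mem_gb": 18}
demands = {
    "cpu_hungry": {"cpus": 3, "mem_gb": 1},
    "memory_intensive": {"cpus": 1, "mem_gb": 4},
}

tasks, allocated = drf_allocate(capacity, demands)
for name in demands:
    share = dominant_share(allocated[name], capacity)
    print(name, tasks[name], "tasks, dominant share", share)
```

With these numbers the CPU hungry framework ends up with 2 tasks (6 of 9 CPUs) and the memory intensive one with 3 tasks (12 of 18 GB), so each holds two thirds of its own dominant resource.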

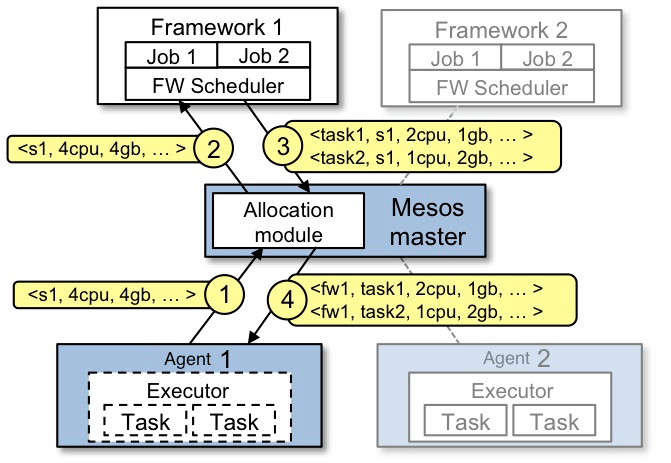

Mesos follows a two level resource allocation policy (Image Credit – Apache Mesos Project Documentation)

To better illustrate the resource allocation method in Mesos, let us discuss the sequence of events in the above figure from the Apache Mesos documentation [1]:

- The Slave Node – as depicted, Agent 1 has 4 CPUs and 4 GB of memory free for allocation to any framework that can use them. It reports this available capacity to the master. The allocation policy module decides that framework 1 should be offered these resources.

- The Master sends a resource offer describing what is available on agent 1 to framework 1.

- The Framework’s scheduler then replies to the master with information on the two tasks to run on the agent, using <2 CPUs, 1 GB RAM> for the first task, and <1 CPU, 2 GB RAM> for the second task.

- The master sends the tasks to the agent, which allocates appropriate resources to the framework’s executor, which in turn launches the two tasks (depicted with dotted-line borders in the figure). Because 1 CPU and 1 GB of RAM are still unallocated, the allocation module may now offer them to framework 2.
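The bookkeeping in the four steps above can be traced in a few lines of Python. This is a toy model of the offer cycle only; the `Resources` class and `launch` function are hypothetical names for this sketch and do not correspond to the Mesos scheduler API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    """Hypothetical <CPUs, GB RAM> pair used for this walkthrough."""
    cpus: int
    mem_gb: int

    def covers(self, other: "Resources") -> bool:
        return self.cpus >= other.cpus and self.mem_gb >= other.mem_gb

    def minus(self, other: "Resources") -> "Resources":
        return Resources(self.cpus - other.cpus, self.mem_gb - other.mem_gb)


def launch(offer: Resources, tasks) -> Resources:
    """Carve each task out of the offer and return what is left unallocated."""
    remaining = offer
    for task in tasks:
        if not remaining.covers(task):
            raise ValueError(f"task {task} does not fit in {remaining}")
        remaining = remaining.minus(task)
    return remaining


# Steps 1-2: agent 1 reports 4 CPUs / 4 GB, which is offered to framework 1.
offer = Resources(cpus=4, mem_gb=4)

# Step 3: framework 1 replies with two tasks to run on the agent.
framework_1_tasks = [Resources(2, 1), Resources(1, 2)]

# Step 4: the tasks are launched; the leftover can be offered to framework 2.
leftover = launch(offer, framework_1_tasks)
print(leftover)
```

The leftover works out to 1 CPU and 1 GB of RAM, matching the amount the allocation module may then offer to framework 2.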

Mesos integration with other SDDC components – Linux Containers, Docker, OpenStack, Kubernetes etc

As with other platforms we are discussing in this series, Mesos does not stand alone in the SDDC and leverages other technologies as needed and as discussed in the last post (@ http://www.vamsitalkstech.com/?p=4670). However it needs to be stated that Mesos does have overlapping functionality at times with technologies such as Kubernetes and OpenStack.

Let us consider the integration points between these technologies –

- Linux Containers – Over the last few years, Linux containers have emerged as a viable and lightweight alternative to hypervisors as a way of running multiple applications on a given OS. Different containers share one underlying OS and perform with less overhead than virtual machines. Given that one of the chief goals of Mesos is to run multiple frameworks on the same set of hardware, Mesos implements what are called isolation modules and isolation mechanisms to achieve its goal of multi-tenancy for different applications running on the same hardware. Mesos supports popular technologies for process isolation – cgroups, Solaris Zones, Docker containers. The first two are the default but the Mesos project has recently added Docker as an isolation mechanism.

- Schedulers – There is no single widely accepted definition as to what constitutes a Container Orchestration technology. The tooling to achieve this has become one of the trickiest parts of the discussion around launching containers at scale, with multiple projects attempting to capture this market. The requirement in the case of Mesos is straightforward – frameworks constitute applications which need to make the most efficient use of hardware. This means avoiding the overhead of VMs and leveraging containers – cgroups or Docker or Rocket etc. Hence Mesos needs to be able to support container orchestration as a core feature. Mesos follows a pluggable model for container orchestration by supporting schedulers like Kubernetes or YARN or Marathon or Docker Swarm. All these tools provide services that organize containers into clusters and run them on specified servers, along with overall lifecycle management and scheduling of applications running as containers. At large webscale properties, massive container oriented environments running hundreds of microservices are all being managed with this combination of tools using Mesos. Mesos needs to be able to start and stop services in response to failure conditions etc.

- Private and Public Cloud Infrastructure as a Service (IaaS) Providers – Mesos works at a different layer of abstraction than an IaaS provider such as OpenStack and aims to solve different problems. While OpenStack provides provisioned infrastructure across OS, Storage, Networking etc., Mesos intends to achieve better cloud instance utilization. Mesos integrates well with OpenStack and runs on top of resources offered up by OpenStack to run frameworks on them. Mesos itself runs on Linux instances in an existing OpenStack deployment, though it can also simply run on bare metal. It only requires a small Linux process to run on each of the nodes. Mesos is also significantly simpler than OpenStack and it takes only a few hours, if that, to get it up and running.

Mesos has also been deployed on public cloud technology with both Microsoft Azure and Amazon AWS. Azure’s container services are built on Mesos. Netflix leverages Mesos extensively on their EC2 cloud and have also written an advanced scheduling library called Fenzo. Fenzo ensures that a first fit kind of assignment is followed where tasks are ‘bin packed’ onto Agents by the requested use of CPU, memory and network bandwidth. Fenzo also autoscales cluster usage based on demand and also spreads tasks of a given job across EC2 availability zones for high availability. [2]

With the stage set from a technology standpoint, let us look at a few real world use cases where Mesos has been deployed in mission critical applications at Netflix.

Mesos Deployment @ Netflix..

Netflix are one of the largest adopters and contributors to Mesos and they use it across a wide variety of business capabilities. These use cases include real time anomaly detection, data science lifecycle (training and model building batch jobs, machine learning orchestration), and other business applications. These workloads span a range of technical architectures – batch processing, stream processing and running microservices based applications.

Netflix runs their business applications as a collection of microservices deployed on Amazon EC2 and their first use of Mesos was to perform fine grained resource allocation for compute tasks to gain greater unit efficiency on EC2. The first use case for Mesos at large enterprises is typically around increasing the usage and efficiency of elastic cloud services. In Netflix’s case, they needed the cluster scheduler to handle agent ephemerality as well as autoscale agents based on demand.

Major Application Use Cases –

- Mantis – Netflix deals with a lot of operational data that is constantly streaming in to their environment. They have a range of use cases on streaming data such as real-time dashboarding, alerting, anomaly detection, metric generation, and ad-hoc interactive exploration of streaming data. To serve these, Mantis is a reactive stream processing platform that is deployed as a cloud native service and focuses on operational data streams. The other goal of Mantis is to make it easy for different development teams to obtain access to real time events and then to build applications on them. The current throughput of Mantis is around 8 million events per second and Apache Mesos is running hundreds of stream-processing jobs around the clock. For certain kinds of streaming applications, this amounts to tracking millions of unique combinations of data all the time.

- Titus – As mentioned above, Netflix runs their Application services stack on Amazon EC2 and most workloads run on Linux containers. Netflix created Titus as a container management platform to provision Docker containers on EC2. Netflix had to do this as Amazon ECS was not yet up to par as a container orchestration solution for EC2. The use cases supported by Titus include serving batch jobs which help with algorithm training (similar titles for recommendations, A/B test cell analysis, etc.) as well as hourly ad-hoc reporting and analysis jobs. Titus recently added support for service style invocation for Netflix resources that are used to provide consistent development environments and more fine grained resource management.

- Meson – One of the most important capabilities that Netflix possesses is its uncanny ability to predict what movies and shows its subscribers want to watch, based on their previous watching history and similar segmentation data. Netflix excels at personalizing video recommendations and this capability is powered by machine learning algorithms. To ensure that a very large number of machine learning workflow pipelines can be efficiently created, scheduled and managed, Netflix created Meson on top of Apache Mesos. For this system to scale, and for the algorithms themselves to be fast, reliable and efficient, it is critical that these pipelines are run over a large cluster of Amazon AWS instances. As depicted below, Meson manages a large number of jobs with differing CPU, Memory and Disk requirements. Once the slaves/agents are chosen, Spark jobs are run on these shared clusters. Meson uses Linux cgroups based isolation. All of the resource scheduling is handled via Fenzo (described above).
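The "first fit" style of bin packing attributed to Fenzo earlier can be illustrated with a short Python sketch. This is not Fenzo's API (Fenzo is a Java library) and not how Netflix implements it; it only shows the general idea of placing jobs with differing requirements onto the first agent that still has room. The agent names, job names and sizes are made up.

```python
def first_fit(agents, jobs):
    """Place each job on the first agent with enough free capacity.

    agents: agent name -> {"cpus": ..., "mem_gb": ...} total capacity
    jobs:   job name   -> {"cpus": ..., "mem_gb": ...} requested amount
    Returns (placement, free), where unplaceable jobs map to None.
    """
    free = {name: dict(cap) for name, cap in agents.items()}
    placement = {}
    for job, need in jobs.items():
        placement[job] = None
        for name, avail in free.items():
            if all(avail[r] >= need[r] for r in need):
                for r in need:
                    avail[r] -= need[r]
                placement[job] = name
                break
    return placement, free


# Hypothetical agents and jobs.
agents = {
    "agent-1": {"cpus": 4, "mem_gb": 16},
    "agent-2": {"cpus": 8, "mem_gb": 32},
}
jobs = {
    "train-model": {"cpus": 3, "mem_gb": 8},
    "hourly-report": {"cpus": 2, "mem_gb": 4},
    "feature-prep": {"cpus": 1, "mem_gb": 8},
    "oversized": {"cpus": 16, "mem_gb": 8},
}

placement, free = first_fit(agents, jobs)
print(placement)
print(free)
```

In this run the first and third jobs fill agent-1 completely, the second lands on agent-2, and the oversized job stays unplaced. In a real deployment an unplaced job is the kind of signal an autoscaler could use to add agents.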

Conclusion..

Apache Mesos is a promising new technology which attempts to solve scaling and clustering challenges encountered in the Software Defined Datacenter (SDDC). The biggest benefits of using Mesos are more efficient use of infrastructure across complex applications with native support for multitenant applications. Mesos can ensure that multiple kinds of applications or frameworks can share a given set of nodes. This ensures not just more efficient sharing of hardware but also fault tolerance and load balancing for complex Cloud Native applications.

While Mesos has had a good degree of adoption in the webscale properties where it was first created (Twitter, Netflix, Uber, Airbnb etc to name the most prominent), it still needs to be proven as a dependable and robust platform in the datacenter.

The next post in this series will explore another exciting technology, Docker, the emerging standard in the Linux container space.

References

[1] Apache Mesos Documentation – http://mesos.apache.org/documentation/latest/architecture/

[2] Distributed Resource Scheduling with Apache Mesos at Netflix – Medium.com